Note: Calling reshape() on the non-contiguous tensor tensor_2 creates a new tensor, tensor_3. tensor_3 is not a view of tensor_1 and hence does not share data with it. For this reason, when the data in the original tensor tensor_1 is changed, the data of tensor_3 remains unchanged.

Both view() and reshape() return a tensor of the desired shape when that is possible, and both raise an error when a tensor of the desired shape cannot be produced. There are, however, a few differences between the two functions. These differences are compared in the table below.

| view() | reshape() |
| --- | --- |
| Always returns a view; never copies the data. | Returns a view if the tensor is contiguous (or has compatible strides); otherwise returns a copy of the tensor. |
| Shares the same underlying data with the original tensor. | Shares the same underlying data with the original tensor if a view is returned. Otherwise, the data is stored in a new tensor. |
| Raises an error if the original tensor is not contiguous and the requested shape is incompatible with its size and stride. | If the original tensor is not contiguous, creates a new contiguous tensor and returns it. |
| Can only be called directly on the tensor. | Can be called as torch.reshape() or directly on the tensor. |

permute(*dims) rearranges the dimensions of a tensor. It is useful for reordering the dimensions of a tensor before feeding it to a network.
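As a rough illustration of using permute() before feeding data to a network, the sketch below reorders a batch from channels-last to channels-first layout. The tensor names and the batch size, image size and channel count are hypothetical, chosen only for this example.

```python
import torch

# Hypothetical batch of 8 RGB images stored channels-last: (N, H, W, C).
batch_nhwc = torch.zeros(8, 32, 32, 3)

# Reorder the dimensions to channels-first: (N, C, H, W).
batch_nchw = batch_nhwc.permute(0, 3, 1, 2)
print(batch_nchw.shape)  # torch.Size([8, 3, 32, 32])

# permute() returns a view: no data is copied, only the strides change.
shares_storage = batch_nchw.data_ptr() == batch_nhwc.data_ptr()
is_contig = batch_nchw.is_contiguous()
print(shares_storage, is_contig)  # True False

# If a later step needs contiguous memory (for example view()),
# contiguous() makes a contiguous copy with the new layout.
batch_ready = batch_nchw.contiguous()
```

Because the permuted tensor is usually non-contiguous, a later call to view() on it may fail, while reshape() or contiguous() followed by view() will work.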

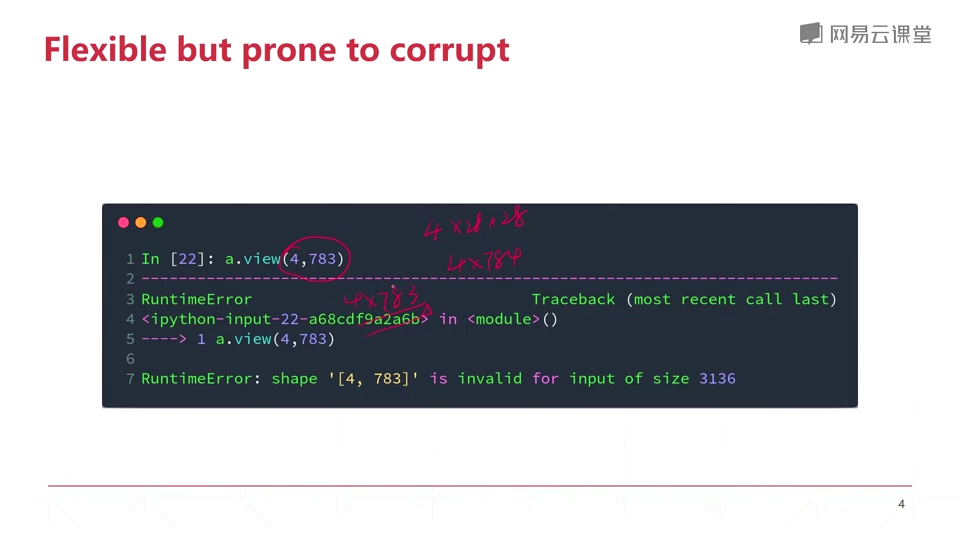

The number of elements in the requested view must equal that of the original tensor. The returned tensor has the required shape but shares its data with the original tensor, so view() never returns a copy of the data. Any change to the data of the original tensor is always reflected in a view of that tensor.

Note: Changing the data of the original tensor changes the data of the new tensor created with view(). Hence, tensor_2 shares the same data with the original tensor tensor_1.

Example: Calling view() on a non-contiguous tensor returns an error when the requested shape is not compatible with the tensor's strides. Storing the transpose of tensor_1 in a new tensor makes the new tensor (tensor_2) non-contiguous, and calling view() on it outputs: RuntimeError: view size is not compatible with input tensor's size and stride (at least one dimension spans across two contiguous subspaces). You can create a new contiguous tensor from the original tensor using the contiguous() function; here, tensor_contg is a contiguous tensor created from tensor_2. You can then use view() on the new tensor.

torch.reshape(x, shape) returns a tensor with the same data as x, reshaped to the required shape. The number of elements in the new tensor has to be the same as that of the original tensor. reshape() can also be called directly on the tensor. reshape() returns a view of the original tensor whenever the tensor is contiguous (or has compatible strides). Otherwise, it creates and returns a copy of the tensor with the required shape. Hence, reshape() might return either a view of the original tensor or a copy of it.

Note: When the tensor is contiguous, a view is returned, for example when tensor_2 is created from tensor_1 using reshape().

Example: Calling reshape() on a non-contiguous tensor returns a new tensor.
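The behavior described above can be sketched as follows. This is a minimal reconstruction rather than the original example code: the names tensor_1, tensor_2, tensor_3 and tensor_contg follow the text, while tensor_view, flat_view and the concrete values are assumptions made for illustration.

```python
import torch

# A contiguous tensor: view() returns a tensor that shares its data.
tensor_1 = torch.arange(6).reshape(2, 3)
tensor_view = tensor_1.view(3, 2)
tensor_1[0, 0] = 100
shares_data = tensor_view[0, 0].item() == 100  # change is visible in the view

# Storing the transpose of tensor_1 in a new tensor.
# This makes the new tensor (tensor_2) non-contiguous.
tensor_2 = tensor_1.t()
print(tensor_2.is_contiguous())  # False

# Calling view() on the non-contiguous tensor raises a RuntimeError.
try:
    tensor_2.view(6)
    view_failed = False
except RuntimeError as err:
    view_failed = True
    print("RuntimeError:", err)

# tensor_contg is a contiguous tensor created from tensor_2;
# view() works on it.
tensor_contg = tensor_2.contiguous()
flat_view = tensor_contg.view(6)

# reshape() on the non-contiguous tensor returns a new tensor (a copy).
tensor_3 = tensor_2.reshape(6)
tensor_1[0, 0] = -1
copy_unchanged = tensor_3[0].item() == 100  # tensor_3 did not follow tensor_1
print(shares_data, view_failed, copy_unchanged)  # True True True
```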
In this chapter of the PyTorch tutorial, you will learn about tensor reshaping in PyTorch. Tensor reshaping is one of the most frequently used operations for data preparation and model training. PyTorch has built-in functions for tensor reshaping.

view(*shape), when called on a tensor, returns a view of the original tensor with the required shape.

Contiguous inputs and inputs with compatible strides can be reshaped without copying, but you should not depend on the copying vs. viewing behavior. See () on when it is possible to return a view. A single dimension may be -1, in which case it is inferred from the remaining dimensions and the number of elements in input. reshape() also works on non-contiguous tensors, where it behaves like contiguous() followed by view().

permute() is quite different from view() and reshape(): it reorders the dimensions themselves instead of reading the same sequence of elements under a new shape. Consider x = torch.arange(4*10*2).view(4, 10, 2): x.permute(2, 0, 1) and x.view(2, 4, 10) have the same shape, but their elements are arranged differently.
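A short sketch of the two points above, -1 inference and the difference between permute() and view(), starting from the tensor x used in the text. The other variable names are hypothetical.

```python
import torch

x = torch.arange(4 * 10 * 2).view(4, 10, 2)

# A single dimension may be -1; it is inferred from the other
# dimensions and the total number of elements (80 here).
y = x.view(-1, 20)    # inferred shape: (4, 20)
z = x.reshape(8, -1)  # inferred shape: (8, 10)

# view() keeps the elements in the same order and only changes the shape.
viewed = x.view(2, 4, 10)

# permute() reorders the dimensions: permuted[k, i, j] == x[i, j, k].
permuted = x.permute(2, 0, 1)

same_shape = viewed.shape == permuted.shape
same_values = torch.equal(viewed, permuted)
print(same_shape, same_values)  # True False
```

Both results have shape (2, 4, 10), so a shape check alone will not catch a view() used where a permute() was intended.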

reshape() returns a tensor with the same data and number of elements as input, but with the specified shape. When possible, the returned tensor will be a view of input; otherwise, reshape() copies the data to a contiguous tensor and returns a view of that copy.
